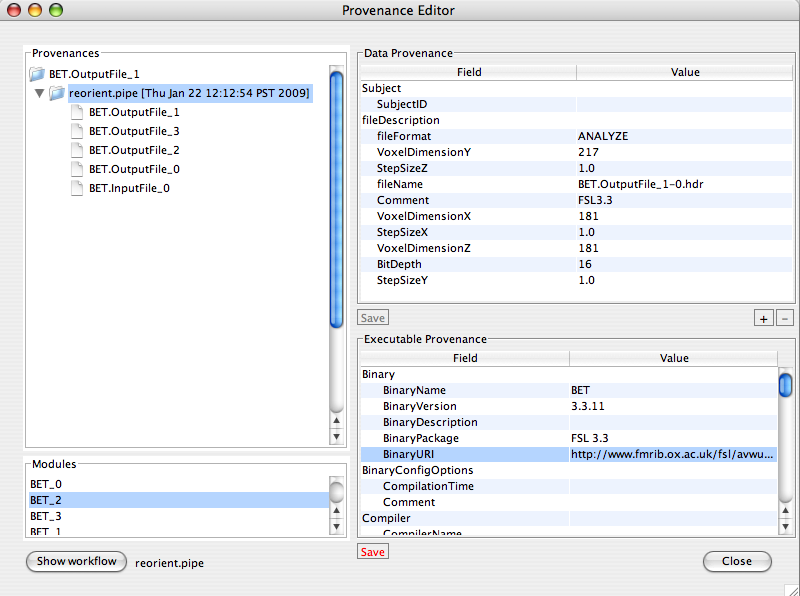

Provenance

LONI Pipeline 4.2 includes a provenance manager that tracks the data, workflow and execution history of all processes. This improves the communication, reproducibility and validation of newly proposed experimental designs, scientific analysis protocols and research findings. The provenance manager can record, track, extract, replicate and evaluate data and analysis provenance, enabling rigorous validation and comparison of classical and novel design paradigms.

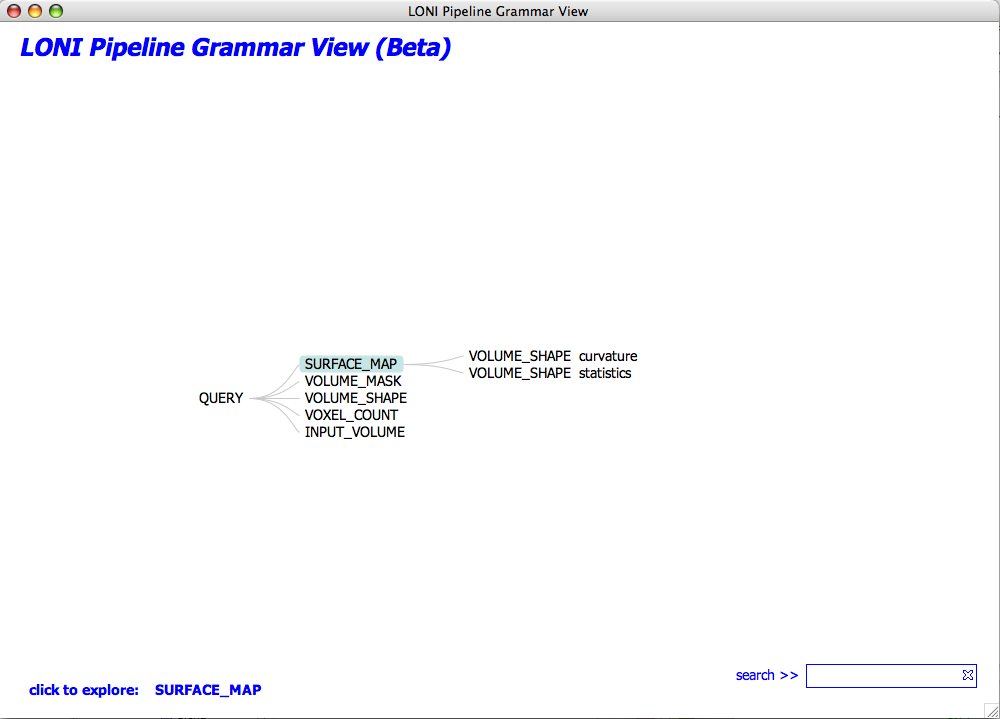

Intelligence

A new intelligence feature of the LONI Pipeline automates the construction of elaborate, functional and valid workflows. Given a set of user-specified keywords, it draws on the full range of available module descriptors and pipeline workflows to generate valid new graphical protocols. It applies a grammar to the set of XML module and pipeline descriptions to determine the most appropriate analysis protocol for those keywords, together with the corresponding module inputs and outputs. It then exports a .pipe file containing a draft of the desired analysis protocol.
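To make the idea of keyword-driven protocol drafting concrete, here is a minimal sketch. It does not reproduce the grammar the feature actually uses; it swaps in a much simpler technique: select modules whose keywords match the user's, then greedily chain them so that each module's input type matches the previous module's output type. `ModuleDescriptor`, `draft_protocol`, the `LIBRARY` entries and their data types are all hypothetical stand-ins for the real XML descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleDescriptor:
    """Hypothetical, simplified stand-in for a module's XML description."""
    name: str
    keywords: frozenset[str]  # lowercase search terms
    input_type: str           # kind of data the module consumes
    output_type: str          # kind of data the module produces


def draft_protocol(modules, keywords, start_type):
    """Greedily chain keyword-matching modules, each consuming the previous
    module's output type. Returns the module names in execution order."""
    wanted = {k.lower() for k in keywords}
    candidates = [m for m in modules if m.keywords & wanted]
    chain, used, current = [], set(), start_type
    while True:
        step = next((m for m in candidates
                     if m.name not in used and m.input_type == current), None)
        if step is None:
            return chain
        chain.append(step.name)
        used.add(step.name)
        current = step.output_type


# Hypothetical module library used only for illustration.
LIBRARY = [
    ModuleDescriptor("SkullStrip", frozenset({"brain", "extraction"}), "T1 image", "brain image"),
    ModuleDescriptor("Register", frozenset({"registration", "alignment"}), "brain image", "aligned image"),
    ModuleDescriptor("Segment", frozenset({"segmentation", "tissue"}), "aligned image", "label map"),
    ModuleDescriptor("Smooth", frozenset({"smoothing"}), "T1 image", "T1 image"),
]

if __name__ == "__main__":
    print(draft_protocol(LIBRARY, ["Brain", "Registration", "Segmentation"], "T1 image"))
```

A greedy chain is easy to follow but finds only one linear protocol; a grammar-based search like the one described above can consider branching workflows and rank alternatives.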

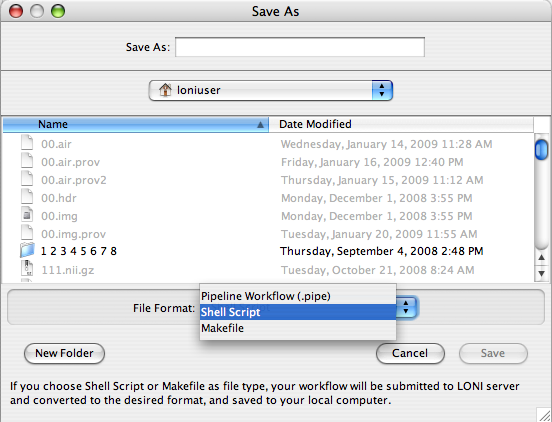

Scripting

Pipeline workflows (.pipe files) may be exported as scripts, which makes it easy to include pipeline protocols in external scripts and to integrate them into other applications. Currently, the LONI Pipeline can export any workflow from its XML (*.pipe) format to a makefile or a bash script, for direct execution or for execution through a queuing system.
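The core of such an export is turning the workflow's dependency graph into an execution order. The sketch below shows one way to do that. It assumes a hypothetical in-memory workflow (`WORKFLOW`, mapping each module to a command line and its upstream modules) rather than parsing a real .pipe file, and the `./skullstrip`, `./register` and `./segment` executables are placeholders. The bash export runs modules in topological order. The makefile export declares one phony target per module and lets `make` resolve the order.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: module name -> (command line, upstream module names).
# The executables are placeholders, not real Pipeline modules.
WORKFLOW = {
    "skullstrip": ("./skullstrip input.nii brain.nii", []),
    "register": ("./register brain.nii atlas.nii registered.nii", ["skullstrip"]),
    "segment": ("./segment registered.nii labels.nii", ["register"]),
}


def to_bash(workflow):
    """Emit a bash script that runs the modules in dependency order."""
    graph = {name: set(deps) for name, (_, deps) in workflow.items()}
    lines = ["#!/bin/bash", "set -e  # abort at the first failing module"]
    for name in TopologicalSorter(graph).static_order():
        lines += [f"# module: {name}", workflow[name][0]]
    return "\n".join(lines) + "\n"


def to_makefile(workflow):
    """Emit a makefile with one phony target per module; make orders them."""
    names = " ".join(workflow)
    lines = [f".PHONY: all {names}", f"all: {names}", ""]
    for name, (command, deps) in workflow.items():
        lines += [f"{name}: {' '.join(deps)}".rstrip(), f"\t{command}", ""]
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_bash(WORKFLOW))
    print(to_makefile(WORKFLOW))
```

One advantage of the makefile form is that `make -j` can run independent modules in parallel, whereas the bash script is strictly sequential.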

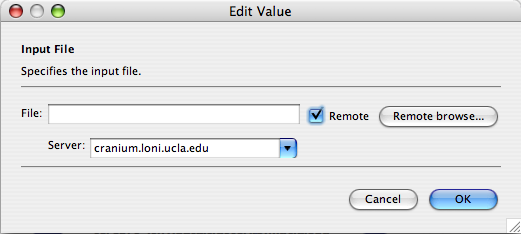

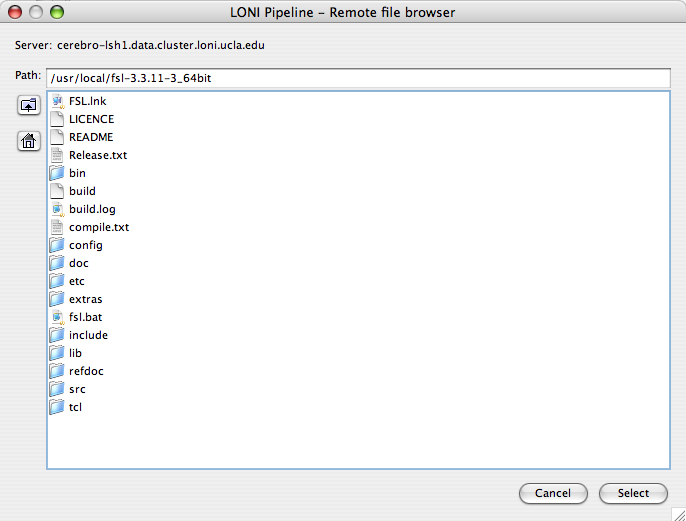

Remote File Browser

The new Remote File Browser lets you browse files located on the server and select them as executable locations or as parameter values for inputs and outputs. To open it, check the “Remote” checkbox and click the “Remote browse…” button. In some cases, such as data sources, you can instead click the Add button and select Remote file.

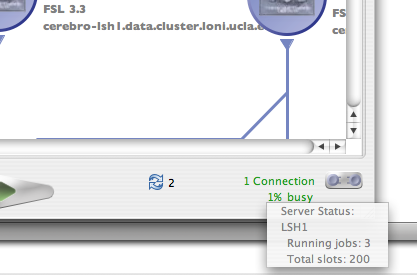

Server Status

Pipeline 4.2 shows the status of each connected server: how busy the server is, how many slots it has in total, and how many jobs it is currently executing. This information appears in the bottom-right corner of the Pipeline window when you connect to a server.

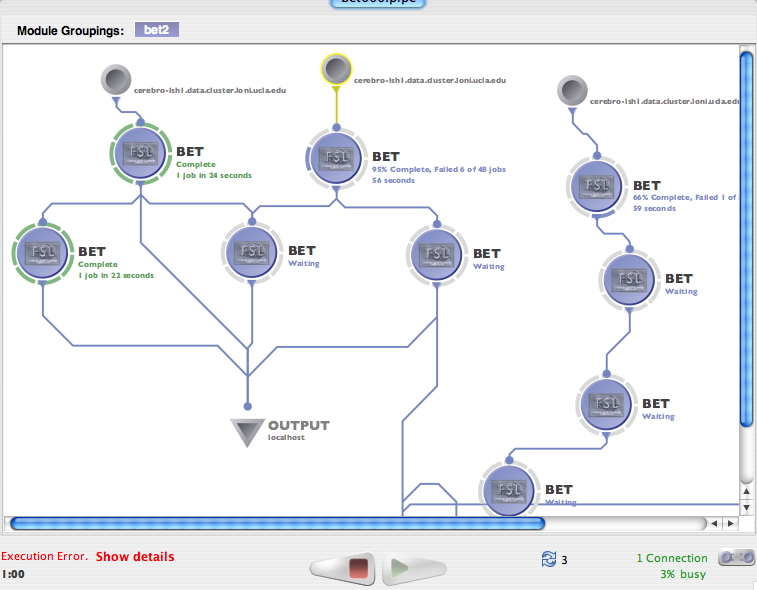

Continue execution with failed instances

In previous versions, Pipeline stopped the workflow when some instances failed. In this version, Pipeline marks the failed instances and continues executing the whole workflow for the instances that succeeded. If all instances of a module fail, Pipeline marks the module as failed and marks every module that depends on it as “Cancelled”.
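The rule above can be sketched as a single pass over the workflow in dependency order. This is an illustrative model, not Pipeline's implementation. `module_statuses`, `PARENTS` and `RESULTS` are hypothetical, and "Completed" is a placeholder name for a module whose instances all succeeded, since the text does not name that status. It assumes that cancellation propagates transitively, so anything downstream of a failed or cancelled module is also cancelled.

```python
from graphlib import TopologicalSorter


def module_statuses(parents, instance_results):
    """Assign a status to every module of a workflow.

    parents: module -> list of modules it depends on.
    instance_results: module -> list of booleans, one per instance
    (True = instance succeeded); ignored for cancelled modules.
    """
    graph = {m: set(p) for m, p in parents.items()}
    status = {}
    for module in TopologicalSorter(graph).static_order():
        if any(status[p] in ("Failed", "Cancelled") for p in parents[module]):
            status[module] = "Cancelled"   # an upstream module produced nothing
            continue
        results = instance_results[module]
        if all(results):
            status[module] = "Completed"   # placeholder name for full success
        elif any(results):
            status[module] = "Incomplete"  # some instances failed; keep going
        else:
            status[module] = "Failed"      # every instance failed
    return status


# Hypothetical workflow: "register" and "measure" both depend on "convert".
PARENTS = {"convert": [], "register": ["convert"], "segment": ["register"], "measure": ["convert"]}
RESULTS = {
    "convert": [True, False, True],  # one of three instances fails
    "register": [False, False],      # runs on the two survivors; both fail
    "segment": [],                   # never runs
    "measure": [True, True],
}

if __name__ == "__main__":
    print(module_statuses(PARENTS, RESULTS))
```

Here "convert" ends up Incomplete but its successful instances still feed "measure", while the failure of "register" cancels only the branch below it.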

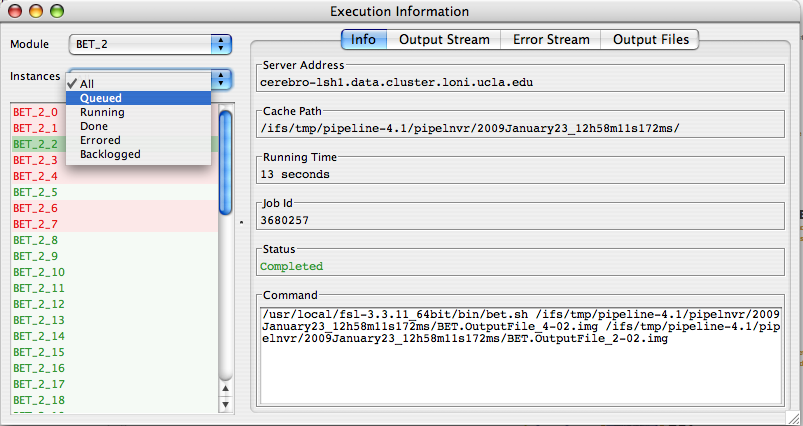

New Statuses

New statuses give more detailed information about modules. In the execution logs dialog, instances are colored according to their status. To see more detailed information about a module without clicking anything, hover the mouse over the module and a popup with the details will appear.

Here are the new statuses and their meanings:

Initializing – Appears when the instance is preparing for submission

Queued – Appears when the instance has submitted its job and the job is waiting in the queue

Running – Appears when the job starts execution

Backlogged – Appears when the maximum job count for the current server has been reached and the server has temporarily blocked the instance until new slots become available

Staging – Appears while files are being transferred between the server and the local host

Incomplete – Appears when the module has finished execution but some of its instances failed

Cancelled – Appears when a parent of the current module failed, making further execution of the current module pointless
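The Backlogged status, together with the slot counts reported under Server Status, suggests a simple admission rule: queue an instance while the server has free slots, otherwise hold it until a slot is released. The sketch below models that rule under stated assumptions. `Status`, `ServerLoad`, `Instance`, `submit` and `release_slot` are hypothetical names. "Busy" is assumed to mean active jobs divided by total slots. Transitions through Running and Staging are not modeled.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    INITIALIZING = "Initializing"
    QUEUED = "Queued"
    RUNNING = "Running"
    BACKLOGGED = "Backlogged"
    STAGING = "Staging"
    INCOMPLETE = "Incomplete"
    CANCELLED = "Cancelled"


@dataclass
class ServerLoad:
    total_slots: int  # maximum number of jobs this server accepts at once
    active_jobs: int  # jobs currently submitted to the server

    @property
    def busy_fraction(self):
        """Assumed definition of "how busy": share of slots in use."""
        return self.active_jobs / self.total_slots if self.total_slots else 1.0


@dataclass
class Instance:
    name: str
    status: Status = Status.INITIALIZING


def submit(instance, server, backlog):
    """Queue the instance if a slot is free; otherwise backlog it."""
    if server.active_jobs >= server.total_slots:
        instance.status = Status.BACKLOGGED
        backlog.append(instance)
    else:
        server.active_jobs += 1
        instance.status = Status.QUEUED


def release_slot(server, backlog):
    """Free a finished job's slot and resubmit the oldest backlogged instance."""
    server.active_jobs -= 1
    if backlog:
        submit(backlog.popleft(), server, backlog)


if __name__ == "__main__":
    server, backlog = ServerLoad(total_slots=2, active_jobs=0), deque()
    for inst in [Instance("i0"), Instance("i1"), Instance("i2")]:
        submit(inst, server, backlog)
        print(inst.name, inst.status.value, f"busy={server.busy_fraction:.0%}")
```

A FIFO backlog keeps the order of submission fair. A real scheduler might also weigh priorities or per-user limits, which this sketch leaves out.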

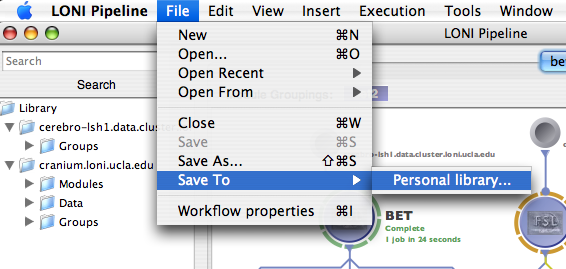

Save To and Open From Personal Library

In previous versions, users had to copy workflows into the personal library location manually. Pipeline 4.2 lets you save workflows to, and open them from, the Personal Library directly, without needing to know where it is located.

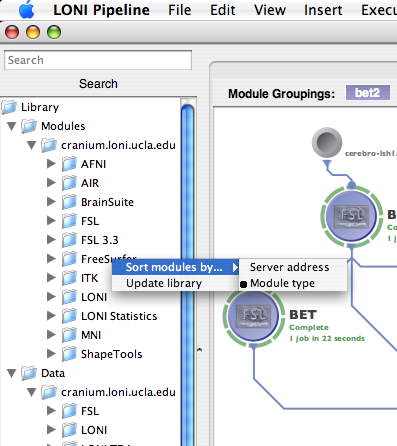

Server Library functions

The Server Library is now more stable and supports workflows from multiple servers. Library items can be sorted “by Module Type” or “by Server address”. You can also right-click and run the Update library function, which automatically connects to the servers included in your current Server Library and updates the Server Library content on your local computer. If you remove your library content and then run the Update library command, Pipeline checks your current connections and updates only from the servers you are connected to.

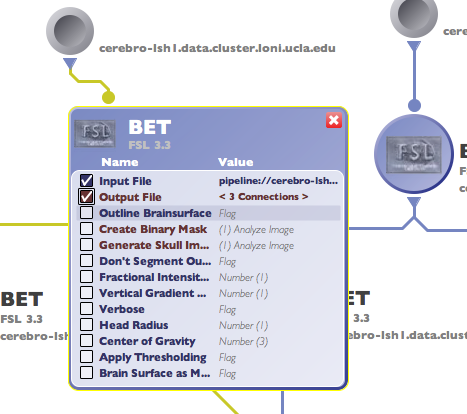

3-State Buttons and the New Look of Exported Parameters

When you open a module’s parameter box, you will notice some changes. The parameter checkbox is now multifunctional and has three states: Disabled, Enabled and Exported. When the checkbox is unchecked, the parameter is disabled and Pipeline will not require a value for it. When the checkbox is checked, the parameter is enabled and Pipeline will require a value for it. Finally, when the checkbox is checked and drawn with a double line, the parameter is exported.
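The three states can be modeled as an enumeration plus a validation check. This sketch is illustrative only. `ParameterState`, `Parameter` and `missing_values` are hypothetical names. It assumes that an exported parameter, being checked, still requires a value in the same way an enabled one does.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ParameterState(Enum):
    DISABLED = "Disabled"  # checkbox unchecked: no value required
    ENABLED = "Enabled"    # checkbox checked: a value is required
    EXPORTED = "Exported"  # checked and drawn with a double line


@dataclass
class Parameter:
    name: str
    state: ParameterState
    value: Optional[str] = None


def missing_values(params):
    """Names of parameters that need a value but have none.
    Assumption: exported parameters are checked, so they also need a value."""
    return [p.name for p in params
            if p.state is not ParameterState.DISABLED and p.value is None]


if __name__ == "__main__":
    params = [
        Parameter("input", ParameterState.ENABLED),
        Parameter("threshold", ParameterState.DISABLED),
        Parameter("output", ParameterState.EXPORTED, "out.nii"),
    ]
    print(missing_values(params))
```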

Server Failover

Failover capabilities have been implemented in Pipeline 4.2, improving robustness and minimizing service disruptions if a single Pipeline server fails. This is achieved by using two physical servers (a primary and a secondary), a virtual Pipeline server name, and a Persistence Database that is decoupled from the servers and runs on a separate system. Each server monitors the state of its counterpart. If the primary server, which holds the virtual Pipeline server name, suffers a catastrophic failure, the secondary server assumes the virtual name, establishes a connection to the Persistence Database and dynamically takes ownership of all current Pipeline jobs. This feature is available only for servers running on Unix systems.
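The takeover sequence can be illustrated with a heartbeat-based monitor. The text does not say how each server detects that its counterpart has failed, so the heartbeat timeout here is an assumption, as are all the names (`Server`, `PersistenceDB`, `VirtualName`, `monitor`). Time is passed in explicitly so that the example is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    name: str
    last_heartbeat: float  # time of the last "I am alive" signal seen from it


@dataclass
class PersistenceDB:
    """Stand-in for the shared Persistence Database on a separate system."""
    job_owner: dict[str, str] = field(default_factory=dict)  # job id -> server


@dataclass
class VirtualName:
    holder: str  # server currently answering to the virtual Pipeline name


def monitor(me, peer, now, timeout, vname, db):
    """One monitoring step run by `me` against its counterpart `peer`.

    If `peer` holds the virtual name and has been silent for longer than
    `timeout`, `me` takes over the name and every job recorded in the
    database. Returns True when a takeover happened.
    """
    if vname.holder != peer.name or now - peer.last_heartbeat <= timeout:
        return False
    vname.holder = me.name
    for job_id in db.job_owner:
        db.job_owner[job_id] = me.name
    return True


if __name__ == "__main__":
    primary, secondary = Server("primary", 100.0), Server("secondary", 100.0)
    vname = VirtualName("primary")
    db = PersistenceDB({"job-1": "primary", "job-2": "primary"})
    print(monitor(secondary, primary, now=120.0, timeout=10.0, vname=vname, db=db))
    print(vname.holder, db.job_owner)
```

Keeping job ownership in a separate database is what makes the takeover possible: the secondary server does not need anything from the failed primary, only access to the shared records.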

Directory-based executable access control

To improve security, directory-based Boolean access control for permitted executables has been implemented. Only executables located in authorized directories on the Pipeline server (the sandbox) are allowed to run on the Cranium cluster. To accommodate trusted power users whose executables reside in non-standard locations, the mechanism also supports user-based exceptions.
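A directory-based check of this kind can be sketched as follows. The sandbox path and the shape of the exception table are assumptions. In particular, user exceptions are modeled here as extra directories granted to specific users, which is one plausible reading of "user-based exceptions". `is_permitted` is a hypothetical name. Paths are canonicalized with `os.path.realpath`, so `..` segments and symbolic links cannot be used to escape the sandbox, and containment is checked component by component with `os.path.commonpath`.

```python
import os

# Hypothetical configuration, for illustration only.
SANDBOX_DIRS = ["/opt/pipeline/sandbox"]
USER_EXCEPTIONS = {"alice": ["/home/alice/tools"]}  # user -> extra allowed dirs


def _inside(path, root):
    """True if `path` equals `root` or lies below it (component-wise)."""
    return os.path.commonpath([path, root]) == root


def is_permitted(executable, user, sandbox_dirs, user_exceptions):
    """Allow an executable only if it resolves into an authorized directory."""
    allowed = list(sandbox_dirs) + list(user_exceptions.get(user, []))
    exe = os.path.realpath(executable)
    return any(_inside(exe, os.path.realpath(d)) for d in allowed)


if __name__ == "__main__":
    for path, user in [
        ("/opt/pipeline/sandbox/bin/tool", "bob"),
        ("/opt/pipeline/sandbox/../evil", "bob"),
        ("/home/alice/tools/mytool", "alice"),
    ]:
        print(path, user, is_permitted(path, user, SANDBOX_DIRS, USER_EXCEPTIONS))
```

A plain string prefix test would wrongly accept `/opt/pipeline/sandboxed/tool`. Comparing whole path components avoids that mistake.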